Line Following Robot

Skills: Image Processing, PID Control, Embedded Programming, OpenCV, Robotics, Rapid Prototyping

Project Summary

ME 433 capstone: Designed and built an autonomous 2-wheel robot that follows a line using real-time image processing and onboard control. Nearly all aspects—mechanics, electronics, software—were custom built, with a focus on robust computer vision and an effective PID-based drive system.

Mechanical Design

- Chassis optimized for fast prototyping—minimal structure, with attention to weight and simplicity.

- Oversized 4.5-inch custom wheels with O-ring grooves for added traction. The larger diameter raised the theoretical top speed, though the image-processing bottleneck limited the actual speed on the track.

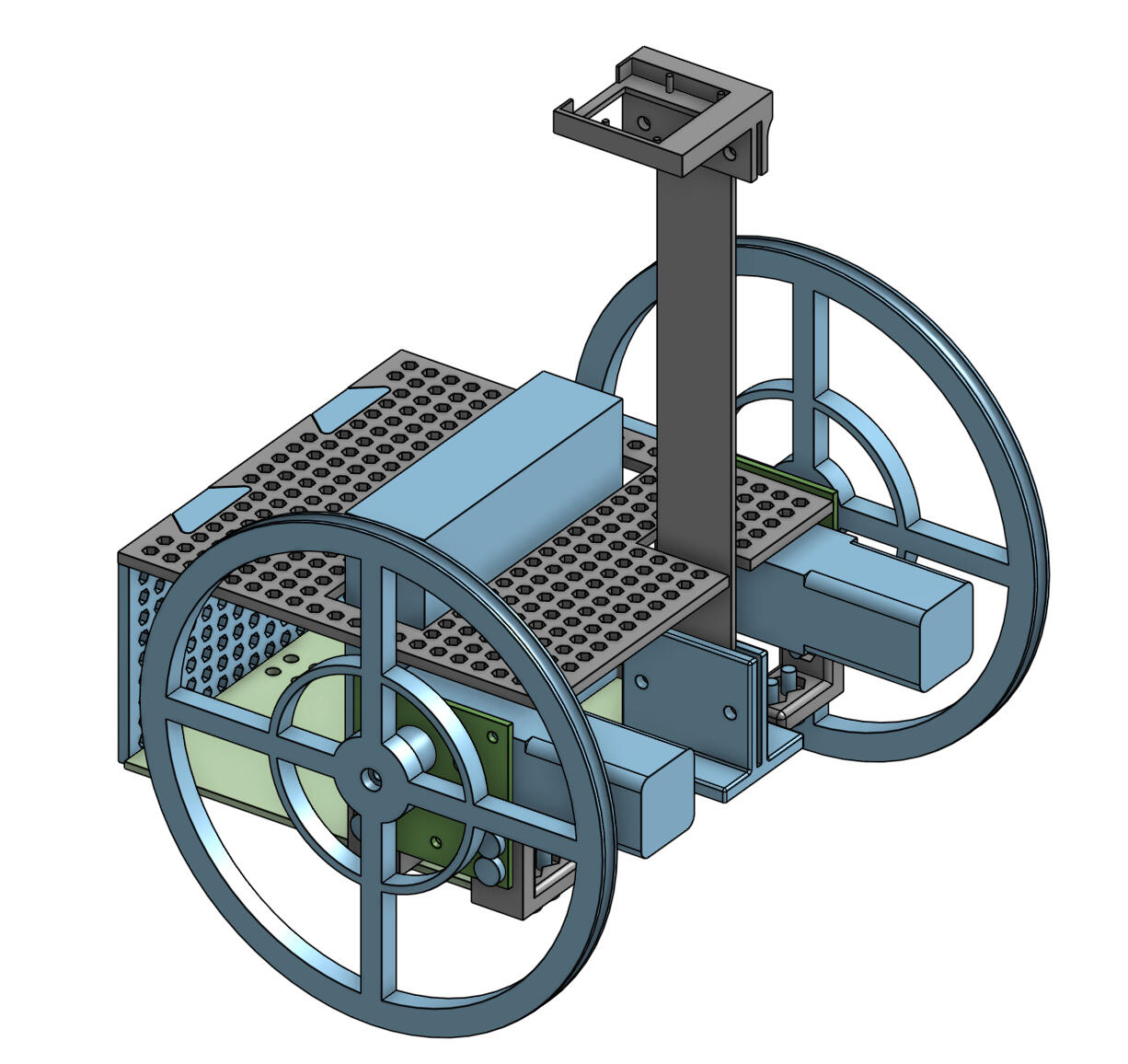

Mechanical design in Onshape CAD

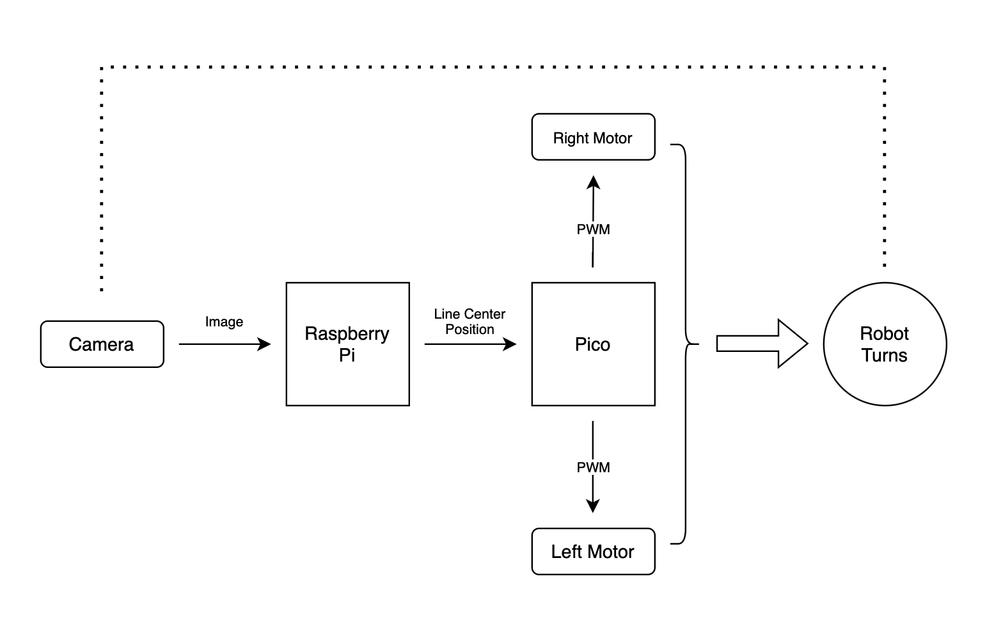
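
As a rough illustration of the wheel-size trade-off noted above, the relation between wheel diameter, speed, and vision rate can be written out. The symbols \(n\) (wheel speed in rev/min) and \(f\) (vision frame rate in frames/s) are introduced here for illustration only; neither value is given in this write-up.

\[
v = \frac{\pi D n}{60}, \qquad \Delta s = \frac{v}{f}
\]

With \(D = 4.5\ \text{in} \approx 0.114\ \text{m}\), each wheel revolution covers \(\pi D \approx 0.36\ \text{m}\). The distance \(\Delta s\) travelled between consecutive line estimates grows with \(D\), which is one way to see why a slow vision loop caps the usable speed of a large wheel.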

System Architecture & Controls

- Camera module streams video to Raspberry Pi Zero W, which handles image acquisition and vision processing.

- Processed line position is sent to a Raspberry Pi Pico running a PID controller for real-time PWM motor commands.

- Feedback loop: Each image yields a new line-center estimate → PID computes a correction from the error → PWM adjusts motor speeds for steering.

Block diagram for line following robot control system
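
The hand-off of the line position from the Pi Zero W to the Pico could look something like the sketch below. The write-up does not say which transport or message format was used, so the newline-terminated ASCII framing and the function names `encode_position` and `decode_position` are assumptions made purely for illustration.

```python
MAX_CENTER_PX = 639  # upper end of the 0 to 639 px range quoted in this write-up


def encode_position(center_px):
    """Format a line-center estimate as one newline-terminated ASCII message.

    Hypothetical framing: the real robot's message format is not documented here.
    """
    if not 0 <= center_px <= MAX_CENTER_PX:
        raise ValueError("line center out of range")
    return f"{center_px}\n".encode("ascii")


def decode_position(message):
    """Parse one message; return None if it is malformed or out of range."""
    try:
        value = int(message.decode("ascii").strip())
    except (UnicodeDecodeError, ValueError):
        return None
    return value if 0 <= value <= MAX_CENTER_PX else None
```

Rejecting malformed or out-of-range messages on the receiving side keeps a corrupted byte from turning into a large steering error.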

Image Processing Pipeline

- Efficient buffer streaming: Uses OpenCV's VideoCapture (cv2.VideoCapture) with the buffer size set to 1 so each read returns a fresh frame.

Starting image

- Cropping: Slices the bottom 100 pixels of each frame (height 190–290, full width) for rapid processing and forward look-ahead.

Cropped image

- Preprocessing: Grayscale conversion, followed by Gaussian blur (\(3 \times 3\) kernel) for noise reduction.

Blurred image

- Thresholding: Binarizes image to isolate line (pixels above threshold = line).

Thresholded image

- Contour Extraction: Identifies all contours, selects the largest (the line), and computes its centroid using spatial moments.

Contour highlighted in green

- Single-Value Output: Outputs line center (0–639 px) as real-time control signal.

Simulated run of the CV program, scanning a single image from bottom to top

Motor Control & PID

- Target setpoint fixed at pixel 320 (center). Error = 320 - current_center.

- PID controller (hand-tuned) outputs steering command to each motor as PWM values.

- Bias Compensation: Hardware asymmetry addressed by a small PWM offset applied to the slower motor.

- Output of controller is converted to PWM and sent to H-bridge to control motors.

Demonstration of control system reaction
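
The control steps listed above can be sketched as a small PID class plus a differential mixing function. This is an illustrative sketch under stated assumptions, not the code that ran on the Pico: the gains, the base duty cycle, the bias parameters, the 0 to 1 duty range, and the sign convention (positive steer speeds up the right wheel) are all hypothetical. Only the setpoint of 320 and the error definition come from this write-up.

```python
from dataclasses import dataclass

SETPOINT_PX = 320  # image center, from the write-up


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, error, dt):
        """Advance the controller by one sample of length dt seconds."""
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def clamp(value, low, high):
    return max(low, min(high, value))


def motor_duties(steer, base=0.5, left_bias=0.0, right_bias=0.0):
    """Mix a steering command into (left, right) duty cycles in the range 0 to 1.

    Assumed convention: positive steer slows the left wheel and speeds up the
    right wheel. The bias terms stand in for the per-motor PWM offset.
    """
    left = clamp(base - steer + left_bias, 0.0, 1.0)
    right = clamp(base + steer + right_bias, 0.0, 1.0)
    return left, right


def control_step(pid, center_px, dt):
    """One loop iteration: line center in, (left, right) duty cycles out."""
    error = SETPOINT_PX - center_px  # error definition from the write-up
    return motor_duties(pid.update(error, dt))
```

For example, with `PID(kp=0.002, ki=0.0, kd=0.0)` and a line detected at pixel 220, the error is 100 and the steering command is about 0.2, so the mix slows the left wheel and speeds up the right one. Clamping the duty cycles keeps a large error from requesting a value the PWM hardware cannot produce.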

Performance & Demonstration

- Robot completed a full lap in under 56.31 seconds.

Dual view of robot completing a circuit

What I'd Improve Next

- Smaller wheels for lower mechanical and control latency, which should improve handling of sharp corners.

- Remount the motors so both drive in the same direction, which would let me remove the software bias workaround.